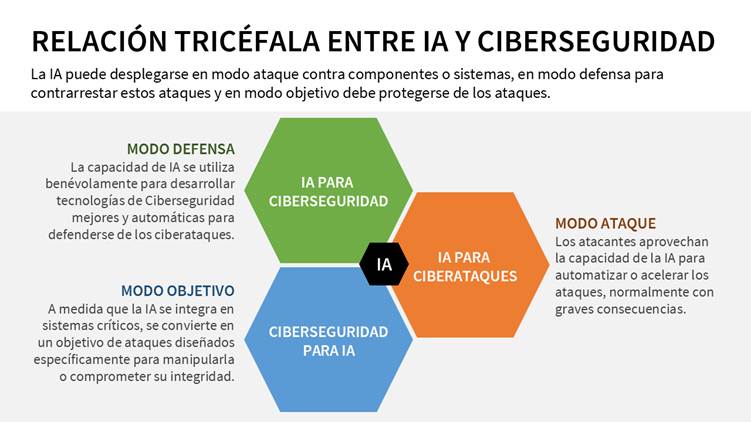

When we think about AI and cybersecurity, what usually comes to mind is security engineers using AI as an ally in their fight against cybercrime. That image is accurate, but it captures only a small part of the picture. AI has become so democratized that attackers now routinely use it to optimize and scale their attacks: according to the ENISA 2024 threat report, more than 50% of malicious actors already integrate AI tools into their campaigns. At the same time, the massive deployment of AI models in critical sectors was bound to attract malicious actors, whether their aim is cybercrime, cyberterrorism, or cyberwarfare.

This boom in the use of AI tools, unprecedented in the history of technology, is generating a complex dynamic that can be understood as a three-headed relationship:

- AI as a cyber defense tool

- AI as an offensive vector in the hands of attackers

- AI as a vulnerable target requiring protection

This three-pronged view (AI for cybersecurity, AI for cyberattacks, and cybersecurity for AI) describes the new digital battlefield on which attackers and defenders now clash.

AI for Cybersecurity: Defense Mode

In its defensive role, AI is becoming the driving force behind the automation of threat detection, response, and prevention. Machine Learning (ML) algorithms can identify anomalous patterns in network traffic, detect intrusions more accurately than traditional signature matching, and generate adaptive responses in real time. Technologies such as AI-based threat hunting, User and Entity Behavior Analytics (UEBA), and intelligent Intrusion Detection Systems (IDS) are already common components of security operations centers (SOCs).
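Production detectors rely on richer features and learned models, but the core idea behind anomaly detection (learn a baseline of normal behavior, then flag large deviations from it) can be illustrated with a simple statistical z-score. This is a deliberately minimal sketch, not the method of any particular product: the single feature (bytes per minute for one host), the traffic values, the function names `fit_baseline` and `is_anomalous`, and the 3-sigma threshold are all illustrative assumptions.

```python
import statistics


def fit_baseline(training):
    """Estimate mean and standard deviation of 'normal' traffic.

    Assumes the training window is clean (contains no attacks).
    """
    return statistics.mean(training), statistics.stdev(training)


def is_anomalous(value, baseline, threshold=3.0):
    """Flag a value whose z-score against the baseline exceeds the threshold.

    threshold=3.0 (three standard deviations) is an illustrative choice.
    """
    mean, stdev = baseline
    if stdev == 0:
        # Degenerate baseline: any deviation at all counts as anomalous.
        return value != mean
    return abs(value - mean) / stdev > threshold


# Hypothetical bytes-per-minute counts for one host (illustrative, not real data)
normal_traffic = [1200, 1150, 1300, 1250, 1180, 1220, 1270, 1190]
baseline = fit_baseline(normal_traffic)

for observed in [1240, 1210, 9800]:
    label = "anomalous" if is_anomalous(observed, baseline) else "normal"
    print(observed, label)
```

With these numbers the baseline mean is 1,220 bytes per minute with a standard deviation of about 50, so 1,240 and 1,210 are treated as normal while 9,800 is flagged. The threshold trades false positives against missed detections, and UEBA-style tools apply the same idea by building a separate baseline per user or entity.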

Among recent advances, foundation models stand out, such as Transformer architectures adapted to cybersecurity, capable of analyzing millions of events per second and correlating complex incidents. Likewise, generative AI is making a substantial operational difference by automating incident reporting and supporting strategic decision-making.

AI for Cyberattacks: Attack Mode

The same capabilities that make AI an ally in cyber defense make it a formidable weapon in the hands of attackers. In attack mode, AI is used to automate highly personalized phishing campaigns, evade detection systems, generate polymorphic malware, and carry out automated reconnaissance in the early stages of an intrusion. A paradigmatic case is the use of generative language models to write social engineering emails whose persuasiveness and contextual detail can surpass those crafted by human attackers.

Deepfakes have also become a key threat: fake videos are created to defame or manipulate individuals, damaging their personal and professional lives. In the political sphere, democracies could be weakened by leaders who dismiss evidence of their crimes with the excuse "it's a deepfake." In the personal sphere, human relationships could face an unprecedented crisis of trust, with constant suspicion poisoning communication and interpersonal bonds. A dedicated discipline has even emerged to counter these threats: disinformation security.

Cybersecurity for AI: Target Mode

Finally, as AI systems become embedded in critical infrastructure, from healthcare to energy to defense, they become targets in their own right. Ensuring their integrity, availability, and confidentiality means confronting the rise of so-called adversarial attacks: evasion attacks that manipulate a model's input data to induce systematic errors, prompt injection, model theft (model stealing), inference of sensitive data from model outputs, and poisoning of training data. Evasion attacks can, for example, cause a computer vision system to misread traffic signs, with potentially catastrophic consequences for autonomous vehicles.
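The traffic-sign scenario relies on evasion: small, targeted changes to the input that flip a model's decision. One well-known technique for crafting such changes is the Fast Gradient Sign Method (FGSM), which nudges every input feature by a fixed amount `epsilon` in the direction that increases the model's loss. The sketch below applies it to a hypothetical three-feature logistic-regression model rather than a real vision system; the weights, the input, the `epsilon` value, and the helper names are illustrative assumptions. Attacks on real image classifiers compute the same gradient over thousands of pixels using an automatic-differentiation framework.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_proba(w, b, x):
    """Probability of class 1 for a logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    """Return -1, 0 or 1 depending on the sign of v."""
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon):
    """Fast Gradient Sign Method against a logistic-regression model.

    With binary cross-entropy loss, the gradient of the loss with respect
    to input feature i is (p - y) * w[i]. Each feature is moved by epsilon
    in the sign of that gradient, so no feature changes by more than epsilon.
    """
    p = predict_proba(w, b, x)
    grad = [(p - y) * wi for wi in w]
    return [xi + epsilon * sign(gi) for xi, gi in zip(x, grad)]


# Hypothetical toy model and input (not a real traffic-sign classifier)
w, b = [2.0, -1.0, 0.5], -0.5
x, y = [0.6, 0.2, 0.4], 1  # correctly classified as class 1
x_adv = fgsm(w, b, x, y, epsilon=0.3)

print(round(predict_proba(w, b, x), 3))      # confidence for class 1, original input
print(round(predict_proba(w, b, x_adv), 3))  # confidence for class 1, perturbed input
```

Here the model's confidence in the correct class falls from roughly 0.67 to roughly 0.41, so the prediction flips even though no feature moved by more than 0.3. For a linear model this sign-of-gradient step is the most damaging change allowed under that per-feature budget; for deep networks it is only a first-order approximation, which is why stronger iterative attacks exist.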

The need for AI-specific cybersecurity has given rise to the emerging field of AI Red Teaming, which assesses how resilient models are against sophisticated attacks. It has also driven the development of governance and regulatory frameworks that establish principles of robustness, transparency, and auditability, such as NIST's AI Risk Management Framework (AI RMF) and the European Union's AI Act. Alongside them stands the recent ISO/IEC 42001 standard for artificial intelligence management systems, which helps organizations develop and use AI responsibly, ethically, and in compliance with current regulations.

Conclusion

The three-pronged relationship between AI and cybersecurity sets a new agenda for research, regulation, and professional training. Understanding and anticipating how these three fronts (defense, attack, and AI as a target) evolve will be essential to building a secure and resilient digital ecosystem. Only a joint strategy among researchers, practitioners, and regulators can keep pace with increasingly autonomous and chameleon-like threats. It is time to think of cybersecurity not only as a technology, but as a culture and a shared responsibility.

Get to know our Professional Master's Degree in Cybersecurity Management, Ethical Hacking, and Offensive Security.